Why Inter-Rater Reliability Is Crucial in Clinical Research

Inter-rater reliability (IRR) is the degree to which different researchers reach the same conclusions when they analyze the same data, and it is an important factor in clinical research. High IRR shows that raters come to similar conclusions, which supports valid and credible results. If IRR is not well defined and measured, the conclusions drawn from the research may be doubtful and the results inconclusive. This article outlines what defines IRR, how it can be assessed and improved, the challenges in attaining high IRR, and how technology can help optimize this critical element of clinical research.

The Impact of Inter-Rater Reliability on Research Validity

Inter-rater reliability (IRR) strongly influences how valid clinical research outcomes are. When two or more raters review the same data, a high IRR means that they are reaching similar conclusions. This consistency is essential for obtaining outcomes that are accurate and can be reproduced by other researchers. If IRR is low, the results are called into question, because inconsistent ratings suggest that they reflect subjective judgment rather than objective facts. For instance, studies with low IRR tend to produce ambiguous results, which raises questions about their credibility. High IRR does not by itself guarantee accuracy, since raters can agree and still be wrong, but it is a prerequisite for credible research whose results are stable and reflect actual clinical outcomes.

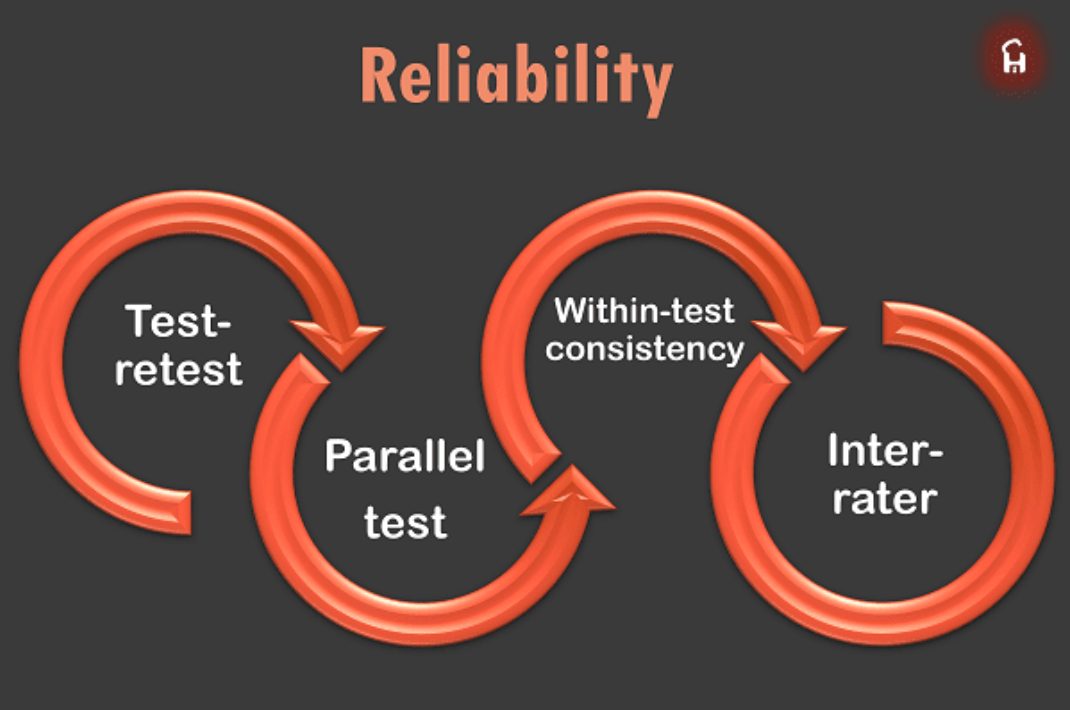

Methods to Measure and Improve Inter-Rater Reliability

Assessing and improving IRR is important for the reliability of clinical investigations that involve several raters. IRR can be evaluated statistically with Cohen’s Kappa, which measures agreement between two raters on categorical ratings while correcting for the agreement expected by chance, and with the Intraclass Correlation Coefficient (ICC), which evaluates the consistency of numeric ratings across raters. To improve IRR, training and calibration of raters are very important. Such sessions inform all raters of the criteria to be used and enable them to apply these criteria in the same manner. Regular recalibration during the study helps maintain the targeted IRR as the research continues. With these techniques, a study can achieve good IRR, so that its results are accurate and comparable with those of other studies.
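As a rough illustration of how Cohen’s Kappa works, the sketch below computes it for two raters using only the Python standard library. Kappa compares the observed proportion of agreement with the agreement expected by chance from each rater's category frequencies. The function name `cohens_kappa` and the sample ratings are hypothetical and chosen only for illustration; a real study would normally rely on an established statistics package.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items (illustrative helper)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must rate the same non-empty set of items.")

    n = len(rater_a)

    # Observed agreement: share of items where both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: for each label, multiply the two raters' usage
    # proportions, then sum over all labels.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    labels = counts_a.keys() | counts_b.keys()
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)

    if expected == 1.0:
        # Both raters used one identical label for every item;
        # kappa is undefined here, so report perfect agreement by convention.
        return 1.0

    return (observed - expected) / (1 - expected)


# Made-up ratings for ten cases, for illustration only.
rater_a = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg", "neg", "pos"]
rater_b = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos", "neg", "pos"]

print(round(cohens_kappa(rater_a, rater_b), 2))  # prints 0.6
```

In this example the raters agree on 8 of 10 cases (0.8), but half of that agreement would be expected by chance (0.5), so kappa is (0.8 - 0.5) / (1 - 0.5) = 0.6. Raw percent agreement would overstate how consistent the raters are.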

Challenges in Achieving High Inter-Rater Reliability

High IRR is often difficult to obtain in clinical research, for several reasons. One main difficulty is that subjective judgments tend to be inconsistent, because raters may interpret the same data in different ways. This leads to variation in assessments and makes it laborious to standardize the evaluation. Another problem is researcher bias, whereby a rater's implicit beliefs or expectations alter the scores. Both issues can compromise the credibility of a study's results. Nevertheless, these challenges can be countered. Detailed guidelines, rater training, and calibration help maintain IRR at a high level and improve the reliability and validity of the outcomes.

The Role of Technology in Enhancing Inter-Rater Reliability

In clinical research, technology is vital for improving inter-rater reliability (IRR). Many digital tools and software packages can be built so that assessments are rated against the same indicators and agreed-upon criteria. For instance, electronic data collection platforms reduce variability in data collection by leading raters through a structured form. AI and machine learning can also enhance IRR, as they are less prone to lapses in human attention and can analyze patterns consistently. These technologies can identify patterns, highlight discrepancies, and prompt raters to reconsider their scores. Used together, these solutions can help you enhance IRR, by as much as twenty-five percent in some cases, and advance the credibility of your research.
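Highlighting discrepancies does not have to involve machine learning. A minimal, rule-based sketch is shown below: it flags every subject whose scores from different raters differ by more than a chosen tolerance, so that the raters can review those cases. The function name `flag_discrepancies`, the `max_gap` parameter, the subject IDs, and the scores are all hypothetical and are not taken from any particular platform.

```python
def flag_discrepancies(ratings, max_gap=1):
    """Return {subject_id: gap} for subjects whose rater scores differ by more than max_gap.

    `ratings` maps each subject ID to a dict of {rater_name: numeric_score}.
    """
    flagged = {}
    for subject_id, scores in ratings.items():
        if len(scores) < 2:
            # A single rating cannot disagree with anything.
            continue
        gap = max(scores.values()) - min(scores.values())
        if gap > max_gap:
            flagged[subject_id] = gap
    return flagged


# Made-up scores on a 1-5 scale, for illustration only.
ratings = {
    "P001": {"rater_1": 4, "rater_2": 4},
    "P002": {"rater_1": 2, "rater_2": 5},
    "P003": {"rater_1": 3, "rater_2": 4},
}

print(flag_discrepancies(ratings))  # prints {'P002': 3}
```

The tolerance is a study-specific choice: a gap of one point may be acceptable on a coarse severity scale but not on a diagnostic yes/no item.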

Conclusion

Inter-rater reliability (IRR) is essential for generating credible clinical research. High IRR means that research findings are consistent and readily replicated, and are therefore more likely to be considered credible within the scientific community. While high levels of IRR can be hard to reach, proper measurement methods, thorough rater training, and the integration of technology can greatly improve IRR scores. IRR will remain critical as clinical research continues to develop as a field. By focusing on enhancing IRR, researchers can ensure that their studies contribute meaningfully to the field and support the advancement of reliable healthcare practices.